A stock trading company handles millions of trades a day and requires excellent performance and reliability within its stock trading system. The company operates a number of event-driven APIs implemented as Mule applications that are hosted on various customer-hosted Mule clusters, and it needs to enable message exchanges between the APIs within its internal network using shared message queues.

What is an effective way to meet the cross-cluster messaging requirements of its event-driven APIs?

According to MuleSoft, what action should an IT organization take regarding its technology assets in order to close the IT delivery gap?

A Mule application is being designed to perform product orchestration. The Mule application needs to join together the responses from an Inventory API and a Product Sales History API with the least latency.

To minimize the overall latency, what is the most idiomatic (used for its intended purpose) design to call each API in the Mule application?

What metrics about API invocations are available for visualization in custom charts using Anypoint Analytics?

A company is designing a Mule application to consume batch data from a partner's FTPS server. The data files have been compressed and then digitally signed using PGP.

What inputs are required for the application to securely consume these files?

An architect is designing a Mule application to meet the following two requirements:

1. The application must process files asynchronously and reliably from an FTPS server to a back-end database using VM intermediary queues for load-balancing Mule events.

2. The application must process a medium rate of records from a source to a target system using a Batch Job scope.

To make the Mule application more reliable, the Mule application will be deployed to two CloudHub 1.0 workers.

Following MuleSoft-recommended best practices, how should the Mule application deployment typically be configured in Runtime Manager to best support the performance and reliability goals of both the Batch Job scope and the file processing VM queues?

An organization plans to migrate its deployment environment from an on-premises cluster to a Runtime Fabric (RTF) cluster. The on-premises Mule applications are currently configured with persistent object stores.

There is a requirement to enable Mule applications deployed to the RTF cluster to store and share data across application replicas and through restarts of the entire RTF cluster.

How can these reliability requirements be met?

A company is planning to migrate its deployment environment from an on-premises cluster to a Runtime Fabric (RTF) cluster. It also has a requirement to enable Mule applications deployed to a Mule runtime instance to store and share data across application replicas and restarts.

How can these requirements be met?

An organization is creating a set of new services that are critical for its business. The project team prefers using REST for all services but is willing to use SOAP with common WS-* standards if a particular service requires it.

What requirement would drive the team to use SOAP/WS-* for a particular service?

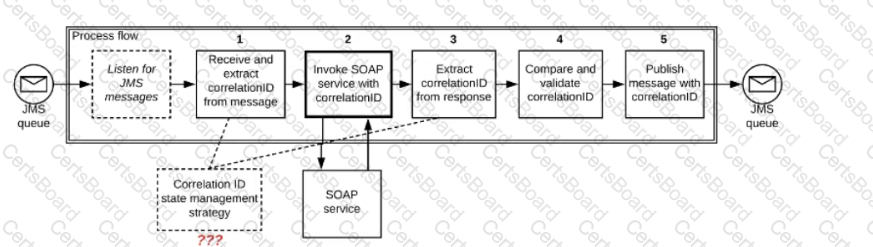

Refer to the exhibit.

A Mule application is deployed to a multi-node Mule runtime cluster. The Mule application uses the competing consumer pattern among its cluster replicas to receive JMS messages from a JMS queue. To process each received JMS message, the following steps are performed in a flow:

Step 1: The JMS Correlation ID header is read from the received JMS message.

Step 2: The Mule application invokes an idempotent SOAP webservice over HTTPS, passing the JMS Correlation ID as one parameter in the SOAP request.

Step 3: The response from the SOAP webservice also returns the same JMS Correlation ID.

Step 4: The JMS Correlation ID received from the SOAP webservice is validated to be identical to the JMS Correlation ID received in Step 1.

Step 5: The Mule application creates a response JMS message, sets its JMS Correlation ID message header to the validated JMS Correlation ID, and publishes that message to a response JMS queue.

Where should the Mule application store the JMS Correlation ID values received in Step 1 and Step 3 so that the validation in Step 4 can be performed, while also making the overall Mule application highly available, fault-tolerant, performant, and maintainable?