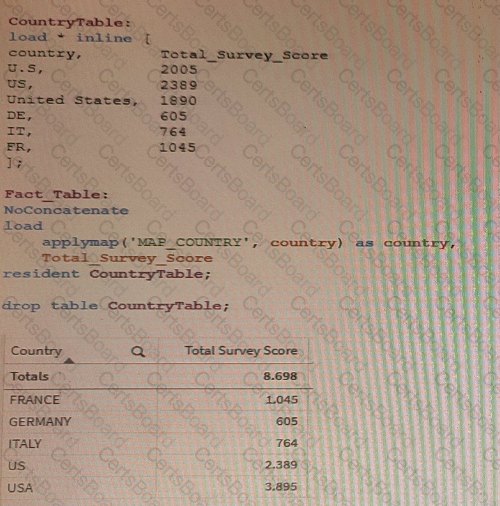

When the load script of an app is executed, the country field needs to be normalized. The developer uses a mapping table to address the issue.

What should the data architect do?
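A mapping table behaves like a two-column lookup. Each raw value is replaced by its normalized counterpart. In Qlik script, `ApplyMap()` returns the original value when no match is found, or an optional default passed as its third argument. The Python sketch below only mimics that lookup behavior. The country spellings are hypothetical, since the actual data is shown only in the image.

```python
def apply_map(mapping, value, default=None):
    """Mimic a mapping-table lookup: return the mapped value if present,
    otherwise the default, otherwise the original value unchanged."""
    if value in mapping:
        return mapping[value]
    return value if default is None else default


# Hypothetical mapping table: raw spellings -> normalized country name.
country_map = {
    "US": "United States",
    "USA": "United States",
    "U.S.": "United States",
    "UK": "United Kingdom",
    "Great Britain": "United Kingdom",
}

raw = ["USA", "UK", "Germany", "U.S."]
normalized = [apply_map(country_map, c) for c in raw]
print(normalized)  # ['United States', 'United Kingdom', 'Germany', 'United States']
```

Unmapped values pass through unchanged, which makes gaps in the mapping table easy to spot. Passing a default such as "Unknown" groups them together instead.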

A data architect at an organization that has implemented Qlik Sense on Windows needs to load large amounts of data from a database that is continuously updated.

New records are added, and existing records get updated and deleted. Each record has a LastModified field.

All existing records are exported into a QVD file. The data architect wants to load the records into Qlik Sense efficiently.

Which steps should the data architect take to meet these requirements?
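Records are inserted, updated, and deleted, so a common approach is an insert/update/delete incremental load. It works in three steps:

- Fetch only rows whose LastModified is later than the previous reload.
- Add the QVD rows whose keys were not just fetched. In Qlik script this is typically done with `WHERE NOT Exists(...)`.
- Keep only keys that still exist in the database. This is typically an `INNER JOIN` against the current key list.

The result is then stored back to the QVD. The Python sketch below models only that logic, using in-memory lists. The `ID` key field and the sample rows are hypothetical.

```python
from datetime import datetime


def incremental_load(qvd_rows, db_rows, last_reload, key="ID"):
    """Sketch of an insert/update/delete incremental load.

    qvd_rows:    records stored in the QVD by the previous reload
    db_rows:     records currently in the database (in practice two queries:
                 changed rows only, plus the list of primary keys)
    last_reload: timestamp of the previous successful reload
    """
    # 1. New and updated records: only rows modified since the last reload.
    merged = {r[key]: r for r in db_rows if r["LastModified"] > last_reload}

    # 2. Add unchanged history from the QVD, skipping keys already loaded
    #    in step 1 (the role of WHERE NOT Exists in Qlik script).
    for r in qvd_rows:
        if r[key] not in merged:
            merged[r[key]] = r

    # 3. Drop records deleted from the database by keeping only keys that
    #    still exist there (the role of the INNER JOIN on the key list).
    current_keys = {r[key] for r in db_rows}
    return sorted((r for k, r in merged.items() if k in current_keys),
                  key=lambda r: r[key])


last_reload = datetime(2024, 1, 31)
qvd = [
    {"ID": 1, "Value": "a", "LastModified": datetime(2024, 1, 1)},
    {"ID": 2, "Value": "b", "LastModified": datetime(2024, 1, 1)},
    {"ID": 3, "Value": "c", "LastModified": datetime(2024, 1, 1)},
]
db = [
    {"ID": 1, "Value": "a", "LastModified": datetime(2024, 1, 1)},   # unchanged
    {"ID": 2, "Value": "b2", "LastModified": datetime(2024, 2, 1)},  # updated
    {"ID": 4, "Value": "d", "LastModified": datetime(2024, 2, 2)},   # inserted
]  # ID 3 was deleted from the database

result = incremental_load(qvd, db, last_reload)
print([(r["ID"], r["Value"]) for r in result])  # [(1, 'a'), (2, 'b2'), (4, 'd')]
```

In a real script, the merged result would be stored back to the same QVD. The new reload time would also be recorded so the next run can filter on it.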

Refer to the exhibit.

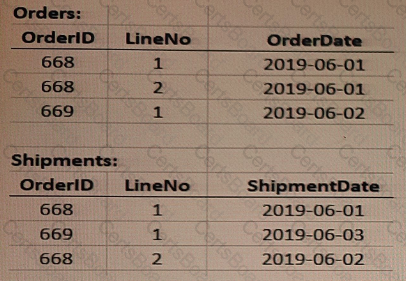

A data architect is loading the tables, and a synthetic key is generated.

How should the data architect resolve the synthetic key?
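Qlik Sense creates a synthetic key when two tables share more than one field name. One common resolution is to combine the shared fields into a single composite key field. Another is to rename fields so that only one field is shared. The exhibit's tables are available only as an image, so the Python sketch below uses hypothetical `Sales` and `Budget` tables. It shows how multi-field links are detected and how a composite key reduces them to a single-field link.

```python
from itertools import combinations


def multi_field_links(tables):
    """Return table pairs sharing more than one field name, which is the
    situation in which Qlik Sense builds a synthetic key."""
    links = {}
    for (a, fields_a), (b, fields_b) in combinations(tables.items(), 2):
        common = sorted(set(fields_a) & set(fields_b))
        if len(common) > 1:
            links[(a, b)] = common
    return links


def with_composite_key(tables, keep_in, drop_from, fields, key_name):
    """Add key_name to both tables and remove the shared fields from one of
    them, so the tables are linked by a single field only."""
    result = {name: list(cols) for name, cols in tables.items()}
    result[keep_in].append(key_name)
    result[drop_from] = [c for c in result[drop_from] if c not in fields]
    result[drop_from].append(key_name)
    return result


def composite_value(row, fields, sep="|"):
    """Build the composite key value for one row, e.g. '2024|01'."""
    return sep.join(str(row[f]) for f in fields)


# Hypothetical tables: both contain Year and Month.
tables = {
    "Sales": ["OrderID", "Year", "Month", "Amount"],
    "Budget": ["Year", "Month", "Target"],
}
shared = multi_field_links(tables)
print(shared)  # {('Sales', 'Budget'): ['Month', 'Year']}

fixed = with_composite_key(tables, "Sales", "Budget", ["Year", "Month"], "YearMonthKey")
print(multi_field_links(fixed))  # {}
print(composite_value({"Year": 2024, "Month": "01"}, ["Year", "Month"]))  # 2024|01
```

Keeping Year and Month in only one table avoids the synthetic key while still letting users filter on those fields. In Qlik script, the composite key is typically built with string concatenation, such as `Year & '|' & Month AS YearMonthKey`.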

The Marketing department uses similar KPIs in different apps, and these KPIs need to be modified frequently according to business needs. The KPIs are created as master items with the same expression in each app.

Which method should the data architect use to manage the modifications in all apps?

A company generates 1 GB of ticketing data daily. The data is stored in multiple tables. Business users need to see trends of tickets processed over the past 2 years. Users very rarely access the transaction-level data for a specific date. Only the past 2 years of data must be loaded, which is 720 GB of data.

Which method should a data architect use to meet these requirements?
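Users need trends over two years but rarely need transaction-level rows. One pattern that fits is a small aggregated summary layer combined with detail fetched only on demand. Qlik Sense offers On-demand App Generation for this kind of split. The Python sketch below only illustrates the split itself. The ticket records and the `processed_on` field are hypothetical.

```python
from collections import Counter
from datetime import date


def daily_trend(tickets):
    """Summary layer: number of processed tickets per day, sorted by date.
    This small table is what trend charts need."""
    return dict(sorted(Counter(t["processed_on"] for t in tickets).items()))


def detail_for(tickets, day):
    """Detail layer: transaction-level rows for one selected date,
    fetched only when a user explicitly asks for them."""
    return [t for t in tickets if t["processed_on"] == day]


# Hypothetical ticket records.
tickets = [
    {"ticket_id": 101, "processed_on": date(2024, 3, 1)},
    {"ticket_id": 102, "processed_on": date(2024, 3, 1)},
    {"ticket_id": 103, "processed_on": date(2024, 3, 2)},
]

trend = daily_trend(tickets)
print(trend)  # {datetime.date(2024, 3, 1): 2, datetime.date(2024, 3, 2): 1}
print([t["ticket_id"] for t in detail_for(tickets, date(2024, 3, 2))])  # [103]
```

Two years of daily counts is on the order of 730 rows per series, far smaller than the raw transactions. The detail path touches only one day at a time.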

A data architect needs to load data from two different databases. Additional data will be added from a folder that contains QVDs, text files, and Excel files.

What is the minimum number of data connections required?

A data architect needs to upload data from ten different sources, but only if there have been changes since the last reload. When data is updated, a new file is placed into a folder mapped to E A439926003. The data connection points to this folder.

The data architect plans a script which will:

1. Verify that the file exists.

2. If the file exists, upload it. Otherwise, skip to the next piece of code.

The script will repeat this subroutine for each source. When the script ends, all uploaded files will be removed with a batch procedure.

Which option should the data architect use to meet these requirements?
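The check-then-load loop described in this question can be sketched in Python as follows. In Qlik script, the existence check is commonly built from a file function such as `FileSize()` tested with `IsNull()`. The folder layout and file names here are hypothetical. Deleting the loaded files is left to the separate batch procedure mentioned in the question.

```python
import tempfile
from pathlib import Path


def load_changed_sources(folder, source_files):
    """For each source: verify the file exists, load it if it does,
    otherwise skip to the next source."""
    loaded = {}
    for name in source_files:
        path = Path(folder) / name
        if path.is_file():                     # 1. verify that the file exists
            loaded[name] = path.read_text()    # 2. load it
        # else: no new file for this source, continue with the next one
    return loaded


# Hypothetical run: ten sources, only two of which delivered a new file.
sources = [f"source_{i:02d}.csv" for i in range(1, 11)]
with tempfile.TemporaryDirectory() as folder:
    (Path(folder) / "source_03.csv").write_text("id,value\n1,a\n")
    (Path(folder) / "source_07.csv").write_text("id,value\n2,b\n")
    result = load_changed_sources(folder, sources)

print(sorted(result))  # ['source_03.csv', 'source_07.csv']
```

Missing files are simply skipped rather than raising an error, so the loop processes all ten sources on every reload.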